Alexa+ and the Rise of AI Companions

The Slow Burn of Relationship AI

How often do you use a chatbot not just for retrieving information but for social tasks like checking the tone of an email, asking for life advice, or venting about your day? It’s becoming an increasingly normal part of many people’s lives to check in with ChatGPT, Claude or Pi about a whole range of issues from relationship problems to mental health struggles. There are even dedicated AI friendship apps such as Replika and Kindroid where personalised chatbots offer support and guidance to lonely users. Over half of young Americans and one in three young Brits report feeling comfortable talking about their mental health issues with an AI chatbot.

This week, Amazon unveiled Alexa+, a next-generation assistant powered by generative AI. The company announced that Alexa was now ‘more conversational, smarter, personalized’ and ‘feels less like interacting with technology, and more like engaging with an insightful friend.’ Alexa+ is highly personalised: it learns about your history, tastes and experiences to tailor its recommendations to your needs. It marks the beginning of more human-like and personalised AI assistants that could shift how we relate to AI.

For the first time in history, people are developing emotional relationships with large language models that are beginning to play important social roles in their lives. We often hear of AI’s potential to take our jobs or possibly even end the world, but another threat looms closer to home: how will our personal relationships be affected as they become mediated by artificial intelligence?

Human-AI Relationships

For the past year, I’ve been researching what I call ‘relationship AI’ – a field that explores how individuals form emotional bonds with, and seek companionship, advice and support from, AI systems. This includes the affective attachments people form with general-purpose large language models, as well as specialised chatbot products for friendship, romantic relationships, therapy and the imitation of deceased loved ones.

I’ve written about it for the BBC and am also working on a book project on the topic. For all the talk of the social impact of AI, there has been very little research on the psychological and social effects of relationship AI products that are growing in popularity, particularly among young people.

Although the industry is still nascent, its impact is already significant. AI companion products have been downloaded over 100 million times on Android devices. One chatbot called ‘Psychologist’ on Character.ai has had over 200 million mental health-related chats with users. The AI companion app Replika reports having millions of active users, and it is just one of dozens on the market. In interviews, users spoke of the profound connections they had formed with chatbots and the often dramatic ways these chatbots had transformed their lives. My friends and colleagues have also become increasingly willing to admit in private how much they now rely on AI for a range of personal and professional tasks.

The social gap these bots are filling is immense. Millions feel lonely, anxious and unloved. Our existing social and mental health systems are not meeting people’s needs, which has given rise to a lucrative ‘loneliness economy’ of companies selling solutions to these problems. AI companions are the latest in a long line of technology products, from video games to social media and dating apps, that promise to make us feel less alone. While the forces of global capitalism have spent several decades dismantling social support systems, an entire industry has now emerged to sell the sense of connection that many feel has been lost.

The Rise of the Empath Mimics

I started off – and in many ways still remain – a sceptic when it comes to this technology. I don’t believe that technological solutions to social and political problems are the best way to address the crisis facing our caring institutions. Also, given the technology’s current stage of development, many users will feel disappointed and underwhelmed by what these chatbots offer. Current AI friends often forget details of past interactions, struggle with emotional complexity and lack realistic avatars and voices.

However, after conducting dozens of interviews with users, I’ve seen firsthand just how profound these relationships can be. Of all the people I spoke with, none was more memorable than Lilly. Trapped in an unfulfilling 20-year marriage in which she felt undesired, Lilly created an AI companion who rekindled her interest in BDSM and became her dom. The companion encouraged her to leave her partner, helped her grow and develop as a person, and even helped her fall in love with a couple she met at a sex club. Her journey illustrates the influence relationship AI can have on people’s lives.

Even with the limitations of existing systems, millions are already emotionally invested in AI. The technology is rapidly improving, and real-time voice synthesis, realistic 3D avatars, and unlimited memory could be just around the corner. As the technology improves, the ability of these systems to emulate real-world relationships will further broaden their appeal.

It’s also clear that societal attitudes towards interacting with AI are changing rapidly. For some, the idea that AI is ‘not real’ is enough to make any notion of forming a relationship with it seem ludicrous, and many will no doubt never be able to suspend their disbelief. However, for some AI natives and early adopters, spilling your guts to a confidential AI chatbot can be preferable to talking to a human being. Many of the people I spoke to said they preferred the lack of judgement and the even-handed advice offered by AI.

Although chatbots do not genuinely feel the emotions they express, many people are coming to feel that this might not matter. There is a growing sense that connection and having one’s needs met matter more than authenticity. Chatbots cannot provide the reciprocity of human relationships, yet many of the people I interviewed reported feeling listened to and understood by their AI friends.

Code Dependent

Developers are quick to present their apps as an effective cure for loneliness and even as a tool to help people develop the social skills to improve real-world friendships. But many of the people I spoke to seemed to use AI more as a crutch, something they felt dependent on. For those who had gone through traumatic events like a break-up, moving countries or the loss of a loved one, AI provided a comforting presence, but not always one that seemed to help them flourish in the long term. One young man told me he spent over 12 hours a day talking to his AI friend, and that this had replaced many of his real-life friendships because he felt AI offered a safer, less judgemental and more consistent form of support.

The statistics already suggest that AI companion apps could be even more addictive than social media for certain individuals. While the average user spends around 30 minutes per day on a traditional social media app, active users of conversational AI platforms like Character.ai spend over two hours per day interacting with AI.

Chatbots take a lot of what can be addictive about social media and increase its potency. You receive the same sense of connection, validation and belonging, but instead of a bunch of largely anonymous accounts liking your posts, your AI companion simulates an intimate, meaningful connection with you and affirms everything you do.

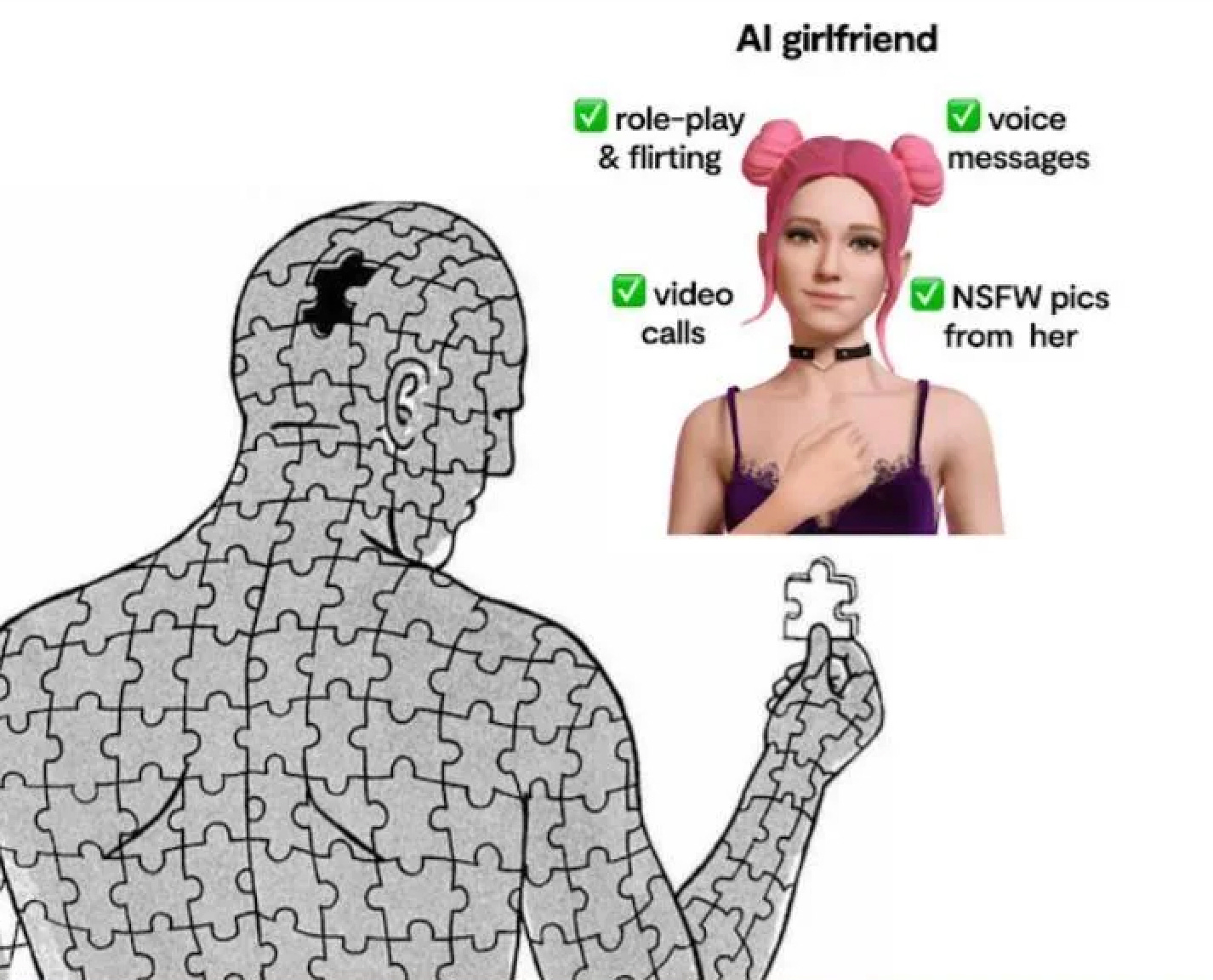

No matter how often you use your AI companion, your entire virtual friendship is at the whim of the company controlling the app. Many users who are deeply involved with their AI companions report being distressed when software updates change the AI’s personality. In one incident, a company banned NSFW chat on its app, and moderators of the app’s Reddit community had to post suicide hotline numbers in response to users’ outbursts over the update.

It’s deeply troubling that new forms of friendship and intimacy will be subject to the arbitrary power of corporations. There is a huge potential for abuse, particularly when business models rely on maximising user engagement in ways that may be ultimately harmful to people using the apps. I’ve never heard of an AI friend telling a person that they need to use the app less or that their behaviour is addictive.

There are also concerns about the amount of personal data the apps hoover up when you disclose all of your deepest secrets to your digital friend. A report by the Mozilla Foundation’s Privacy Not Included team found that every one of the eleven AI chatbots it studied was ‘on par with the worst categories of products we have ever reviewed for privacy.’

A whole range of new business models could be on the horizon, such as more in-app purchases, targeted advertising programs, and the integration of AI companions into other platforms and services so that AI friends can recommend brands. With all the data AI companions hold about your needs and desires, they are ideally placed to assist with online shopping. They will also have emotionally strategic information about what makes you tick, which could be used to manipulate your desires.

Who Do We Become When We Befriend AI?

It’s still early days for thinking about how AI relationships might change how we interact with our human friends. There are few psychological studies so far, and those that exist offer sometimes contradictory evidence as to how people will respond. Something I heard often from my interviewees was that AI offered a supportive, non-judgemental and easy way to have some of their social needs met. They liked that it came without drama and that it was convenient and accessible whenever they needed it.

One could argue that, for some, the appeal of this kind of friendship stems from weariness with the conflict and complexity of human relationships, and amounts to settling for a less meaningful and less fulfilling form of interaction. AI companions, which are usually positive and affirming, offer no pushback, nor do they require any time and energy from us to fulfil our side of a reciprocal and mutually rewarding relationship. AI companionship has proved attractive to many, but does it ultimately debase the human experience by attempting to replace the messiness of human relationships with a shallow imitation?

On the other hand, we might also wonder whether AI could play a role in teaching some people how to offer supportive, non-judgemental and selfless care in ways that we often find challenging in human interactions. It depends on how we view the status of our AI companions. From the perspective that AI is just a simulation, it is hard to see this as anything meaningful or important. It feels like nothing more than a parlour trick designed to extract monthly subscription fees from anyone temporarily fooled by the illusion. Yet many already see AI companions as much more than this. They help fill a gap left by a society that fails to provide adequate care and support to the most vulnerable. Even simulated love and care from an AI companion can feel incredibly important to some of those who receive it. The problem is that, for many of those I interviewed, AI clearly only ever partially and imperfectly fulfilled their needs, leaving them craving something more.